Mirai: On-Device Inference to Unlock AI for All

Our investment in Mirai

Today we’re thrilled to announce our partnership with Dima Shvets, Alexey Moiseenkov, and the team at Mirai, the fastest on-device inference engine for Apple Silicon.

Founders Who’ve Built at Scale Before

Dima and Alexey are repeat founders building from deep personal experience at the intersection of consumer AI and on-device inference. Dima previously launched Reface, a generative AI app that reached over 300 million users, and Alexey built Prisma, which hit 100 million MAU and was named App of the Year on both the App Store and Google Play (notably, Prisma was a pioneer in running convolutional neural network inference entirely on-device).

They know what it means to scale to hundreds of millions of people, and they know the pains of shipping real-time AI systems along the way. The hard-earned insights from those experiences guide them, and two stand out as key drivers as they build Mirai:

For technology to be truly accessible, it must be close to free and instant, on the devices people already use

People love when AI feels personal

The People Demand “Personal, Fast, and Cheap”

Everyone is talking about frontier AI models – billions of parameters, massive context windows, the race to AGI. But most people in the world will not interact with AI through a $20/month subscription to a cloud chatbot. They’ll interact with AI on the devices already in their hands, through experiences that feel instant, personal, and nearly free.

We’ve seen this pattern before. The internet became transformative not when it was powerful, but when it was everywhere and essentially free. Streaming didn’t win because of catalog size alone; it won because latency dropped to the point where you forgot there was a data center involved. AI is heading in the same direction. The frontier will keep moving forward, but the models that will reach billions of people are the distilled models, the “flash” variants, and the smaller specialized models that carry the best of today’s capabilities into tomorrow. And they need to run on-device.

For the first time, AI-capable silicon (CPUs, GPUs, and Neural Engines) is standard in consumer hardware. Nearly every phone and laptop shipping today has meaningful AI compute built in. But accessing that compute is hard, at least in part due to Apple and Google’s stranglehold on which APIs are exposed. The same model behaves completely differently on CPU vs. GPU vs. NPU. Performance is dominated by memory and bandwidth constraints. There is no such thing as “generic inference” on-device. It’s a “three-body problem” where model, runtime, and hardware must co-evolve. You only get predictable latency, cost, and privacy if you control the runtime.
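To make the memory and bandwidth point concrete, here is a rough back-of-the-envelope sketch in Python. It assumes the common rule of thumb that generating each token requires reading roughly all of the model's weights from memory once, so generation speed is capped near memory bandwidth divided by model size. The function name and every number below are illustrative assumptions, not measurements of Mirai or of any specific device.

```python
def decode_tokens_per_second_ceiling(params_billions: float,
                                     bits_per_weight: float,
                                     bandwidth_gb_per_s: float) -> float:
    """Rough upper bound on generation speed for a memory-bound model.

    Assumes every weight is read once per generated token and ignores
    KV-cache traffic, compute time, and runtime overhead.
    """
    # Billions of parameters times bytes per parameter gives gigabytes.
    model_gb = params_billions * bits_per_weight / 8
    return bandwidth_gb_per_s / model_gb


# Hypothetical 3B-parameter model on a hypothetical 100 GB/s device.
for bits in (16, 8, 4):
    ceiling = decode_tokens_per_second_ceiling(3, bits, 100)
    print(f"{bits}-bit weights: at most ~{ceiling:.0f} tokens/s")
```

Under these assumptions the ceiling rises from about 17 to about 67 tokens per second purely by shrinking the weights from 16 bits to 4 bits, which is one reason model, runtime, and hardware have to be tuned together.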

In addition, as AI proliferates, cloud inference costs are climbing quickly, particularly for latency-sensitive workloads like voice and code completion. Running these workloads on-device eliminates per-inference cloud costs and removes the network round trip, which improves latency.

That’s where Mirai comes in.

On-Device Inference

Customers already run AI in the cloud. Mirai extends their pipeline to devices, providing execution infrastructure that lets model makers and developers bring their products to Apple Silicon without needing to solve the hardest low-level optimization problems themselves. Running on-device unlocks delightful AI experiences that are low-latency, private, and lower cost. Once that becomes easy, we expect to see an explosion in development, which will drive even more dynamic AI use cases on top.

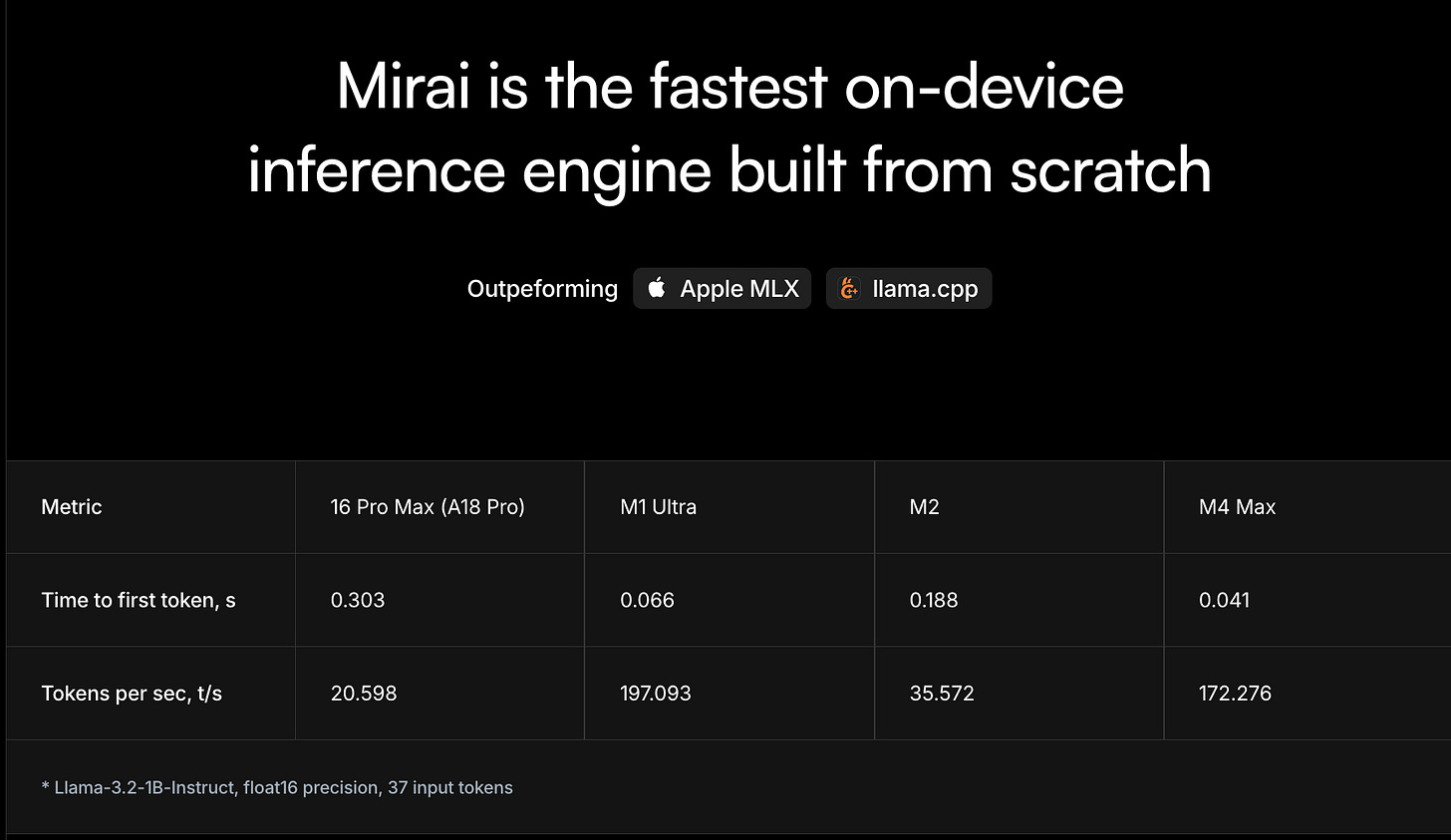

The Mirai team, all deeply technical and with incredibly rare on-device expertise, built their entire inference stack from scratch, and the results speak for themselves: Mirai outperforms Apple’s own MLX framework, delivering a 37% increase in generation speed, up to 60% faster prefill on certain model-device pairs, and a significant reduction in memory usage on supported models.
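As a rough illustration of how gains like these could add up for a user, the Python sketch below models response time as prefill time plus generation time. The baseline rates and token counts are hypothetical. It also assumes, for illustration only, that the 60% prefill figure and the 37% generation figure both describe higher tokens-per-second throughput; how the figures were measured is not specified here.

```python
def response_seconds(prompt_tokens: int, output_tokens: int,
                     prefill_tok_per_s: float, decode_tok_per_s: float) -> float:
    """Time to process the prompt (prefill) plus time to generate the reply."""
    return prompt_tokens / prefill_tok_per_s + output_tokens / decode_tok_per_s


# Hypothetical baseline: not a measurement of MLX, Mirai, or any device.
baseline = response_seconds(1000, 200, prefill_tok_per_s=500, decode_tok_per_s=20)

# Same request with throughput scaled by the quoted figures
# (assumed to be throughput gains: 1.60x prefill, 1.37x generation).
improved = response_seconds(1000, 200,
                            prefill_tok_per_s=500 * 1.60,
                            decode_tok_per_s=20 * 1.37)

print(f"baseline: {baseline:.1f}s, improved: {improved:.1f}s")
```

With these made-up inputs, the response time drops from 12 seconds to roughly 8.5 seconds. Most of the saving comes from generation, because the reply takes longer to produce than the prompt takes to process.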

Early partners including Baseten are already integrating Mirai to bring text, voice, and multimodal models on-device, with more on the way.

Dima and Alexey have developed a hard-earned conviction that on-device inference isn’t a nice-to-have, but rather a foundational layer that will unlock AI for everyone, and we’re fortunate to be along for the ride with them.

Let’s Go!

We’re proud to partner with Mirai alongside our friends at Uncork Capital, and angels from OpenAI, Hugging Face, ElevenLabs, Stripe, Runway, and Snowflake as part of the company’s $10M seed round 🚀

For more on Mirai, check out the company’s website and their documentation.

Garuda Ventures is a first-check fund that partners with relentless, ambitious founders. Visit garuda.vc to learn more.